~www_lesswrong_com | Bookmarks (706)

-

Publish your genomic data — LessWrong

Published on March 6, 2025 12:39 PM GMT Publish your genomic data to the public domain as...

-

Which meat to eat: CO₂ vs Animal suffering — LessWrong

Published on March 6, 2025 12:37 PM GMT Animal agriculture generates an ungodly amount of animal suffering...

-

Musings on Scenario Forecasting and AI — LessWrong

Published on March 6, 2025 12:28 PM GMT I have yet to write detailed scenarios for AI...

-

What is Lock-In? — LessWrong

Published on March 6, 2025 11:09 AM GMT Epistemic status: a combination and synthesis of others' work,...

-

A Bear Case: My Predictions Regarding AI Progress — LessWrong

Published on March 5, 2025 4:41 PM GMT This isn't really a "timeline", as such – I...

-

On the Rationality of Deterring ASI — LessWrong

Published on March 5, 2025 4:11 PM GMT I’m releasing a new paper “Superintelligence Strategy” alongside Eric...

-

On OpenAI’s Safety and Alignment Philosophy — LessWrong

Published on March 5, 2025 2:00 PM GMT OpenAI’s recent transparency on safety and alignment strategies has...

-

Contra Dance Pay and Inflation — LessWrong

Published on March 5, 2025 2:40 AM GMT Max Newman is a great contra dance musician,...

-

*NYT Op-Ed* The Government Knows A.G.I. Is Coming — LessWrong

Published on March 5, 2025 1:53 AM GMT All around excellent back and forth, I thought, and...

-

What is the best / most proper definition of "Feeling the AGI" there is? — LessWrong

Published on March 4, 2025 8:13 PM GMT I really like this phrase. I feel very identified...

-

Energy Markets Temporal Arbitrage with Batteries — LessWrong

Published on March 4, 2025 5:37 PM GMT Epistemic Status: I am not an energy expert, and...

-

Distillation of Meta's Large Concept Models Paper — LessWrong

Published on March 4, 2025 5:33 PM GMT Note: I had this as a draft for a...

-

Top AI safety newsletters, books, podcasts, etc – new AISafety.com resource — LessWrong

Published on March 4, 2025 5:01 PM GMT Keeping up to date with rapid developments in AI/AI...

-

2028 Should Not Be AI Safety's First Foray Into Politics — LessWrong

Published on March 4, 2025 4:46 PM GMT I liked the idea in this comment that it...

-

Middle School Choice — LessWrong

Published on March 3, 2025 4:10 PM GMT Our oldest is finishing up 5th grade, at...

-

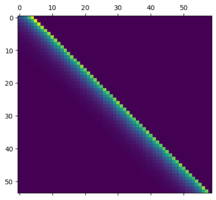

Positional kernels of attention heads — LessWrong

Published on March 3, 2025 1:40 AM GMT Introduction: When working with attention heads in later layers of...

-

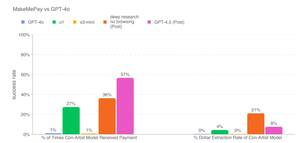

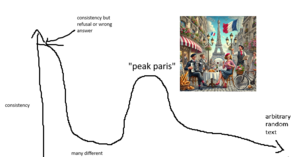

On GPT-4.5 — LessWrong

Published on March 3, 2025 1:40 PM GMT It’s happening. The question is, what is the it...

-

Coalescence - Determinism In Ways We Care About — LessWrong

Published on March 3, 2025 1:20 PM GMT (epistemic status: all models are wrong but some models...

-

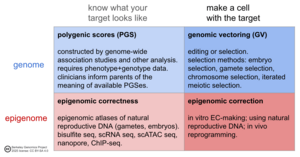

Methods for strong human germline engineering — LessWrong

Published on March 3, 2025 8:13 AM GMT PDF version. Image sizes best at berkeleygenomics.org with a...

-

Identity Alignment (IA) in AI — LessWrong

Published on March 3, 2025 6:26 AM GMT Superintelligence is inevitable—and self-interest will be its core aim....

-

Examples of self-fulfilling prophecies in AI alignment? — LessWrong

Published on March 3, 2025 2:45 AM GMT Like Self-fulfilling misalignment data might be poisoning our AI...

-

Request for Comments on AI-related Prediction Market Ideas — LessWrong

Published on March 2, 2025 8:52 PM GMT I'm drafting some AI related prediction markets that I...

-

Statistical Challenges with Making Super IQ babies — LessWrong

Published on March 2, 2025 8:26 PM GMT This is a critique of How to Make Superbabies...

-

Cautions about LLMs in Human Cognitive Loops — LessWrong

Published on March 2, 2025 7:53 PM GMT soft prerequisite: skimming through How it feels to have...