~www_lesswrong_com | Bookmarks (706)

-

Less Laptop Velcro — LessWrong

Published on February 9, 2025 3:30 AM GMT A year ago I broke my laptop screen,...

-

AXRP Episode 38.7 - Anthony Aguirre on the Future of Life Institute — LessWrong

Published on February 9, 2025 1:10 AM GMT YouTube link The Future of Life Institute is one...

-

[Job ad] LISA CEO — LessWrong

Published on February 9, 2025 12:18 AM GMT Overview Job Title: Chief Executive Officer Company Name: London Initiative for...

-

Goals don't necessarily start to crystallize the moment AI is capable enough to fake alignment — LessWrong

Published on February 8, 2025 11:44 PM GMT (A very short post to put the thoughts out...

-

How AI Takeover Might Happen in 2 Years — LessWrong

Published on February 7, 2025 5:10 PM GMT I’m not a natural “doomsayer.” But unfortunately, part of...

-

On the Meta and DeepMind Safety Frameworks — LessWrong

Published on February 7, 2025 1:10 PM GMT This week we got a revision of DeepMind’s safety...

-

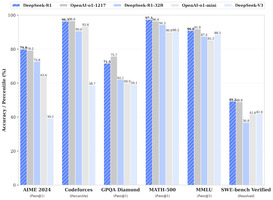

Illusory Safety: Redteaming DeepSeek R1 and the Strongest Fine-Tunable Models of OpenAI, Anthropic, and Google — LessWrong

Published on February 7, 2025 3:57 AM GMT DeepSeek-R1 has recently made waves as a state-of-the-art open-weight...

-

When you downvote, explain why — LessWrong

Published on February 7, 2025 1:03 AM GMT Being a newcomer and having your post downvoted can...

-

Medical Windfall Prizes — LessWrong

Published on February 6, 2025 11:33 PM GMT Summary AI may produce a windfall surge in government...

-

Do No Harm? Navigating and Nudging AI Moral Choices — LessWrong

Published on February 6, 2025 7:18 PM GMT TL;DR: How do AI systems make moral decisions, and...

-

Open Philanthropy Technical AI Safety RFP - $40M Available Across 21 Research Areas — LessWrong

Published on February 6, 2025 6:58 PM GMT Open Philanthropy is launching a big new Request for Proposals...

-

AISN #47: Reasoning Models — LessWrong

Published on February 6, 2025 6:52 PM GMT Welcome to the AI Safety Newsletter by the Center...

-

Wild Animal Suffering Is The Worst Thing In The World — LessWrong

Published on February 6, 2025 4:15 PM GMT Crossposted from my blog which many people are saying...

-

Detecting Strategic Deception Using Linear Probes — LessWrong

Published on February 6, 2025 3:46 PM GMT Can you tell when an LLM is lying from...

-

Alignment Paradox and a Request for Harsh Criticism — LessWrong

Published on February 5, 2025 6:17 PM GMT I’m not a scientist, engineer, or alignment researcher in...

-

Introducing International AI Governance Alliance (IAIGA) — LessWrong

Published on February 5, 2025 4:02 PM GMT The International AI Governance Alliance (IAIGA) is a new...

-

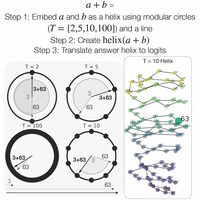

Language Models Use Trigonometry to Do Addition — LessWrong

Published on February 5, 2025 1:50 PM GMT I (Subhash) am a Masters student in the Tegmark...

-

Reviewing LessWrong: Screwtape's Basic Answer — LessWrong

Published on February 5, 2025 4:30 AM GMT Yeah I put this off until the last day,...

-

Journalism student looking for sources — LessWrong

Published on February 4, 2025 6:58 PM GMT Hello LessWrong community, I am a journalism student doing my...

-

Nick Land: Orthogonality — LessWrong

Published on February 4, 2025 9:07 PM GMT Editor's note: Due to the interest aroused by @jessicata's posts...

-

Subjective Naturalism in Decision Theory: Savage vs. Jeffrey–Bolker — LessWrong

Published on February 4, 2025 8:34 PM GMT Summary: This post outlines how a view we call subjective...

-

Anti-Slop Interventions? — LessWrong

Published on February 4, 2025 7:50 PM GMT In his recent post arguing against AI Control research,...

-

We’re in Deep Research — LessWrong

Published on February 4, 2025 5:20 PM GMT The latest addition to OpenAI’s Pro offerings is their...

-

The Capitalist Agent — LessWrong

Published on February 4, 2025 3:32 PM GMT With the ongoing evolutions in “artificial intelligence”, of course...