~www_lesswrong_com | Bookmarks (702)

-

Keltham's Lectures in Project Lawful — LessWrong

Published on April 1, 2025 10:39 AM GMT
(Not an April fools' joke) If anyone wants to return...

-

Was the historical Jesus talking about evolution? (You might be surprised) — LessWrong

Published on April 1, 2025 10:32 AM GMT
Out of all the research rabbit holes I've ever...

-

Follow me on TikTok — LessWrong

Published on April 1, 2025 8:22 AM GMT
For more than five years, I've posted an average...

-

New Cause Area Proposal — LessWrong

Published on April 1, 2025 7:12 AM GMT
Epistemic status - statistically verified. I'm writing this post to...

-

Why do many people who care about AI Safety not clearly endorse PauseAI? — LessWrong

Published on March 30, 2025 6:06 PM GMT
tl;dr: From my current understanding, one of the following two...

-

Extracting proper nouns a model "knows" using entity-detection neurons. — LessWrong

Published on March 30, 2025 4:58 PM GMT
Introduction: Research on Sparse Autoencoders (SAEs) has identified "known entity"...

-

The g-Zombie Formal Argument — LessWrong

Published on March 30, 2025 1:16 PM GMT
Note: I created an entry highlighting the formal attack...

-

Memory Persistence within Conversation Threads with Multimodal LLMS — LessWrong

Published on March 30, 2025 7:16 AM GMT
In neuroscience, we learned about foveated vision — our...

-

How I talk to those above me — LessWrong

Published on March 30, 2025 6:54 AM GMT
Now and then, at work, we’ll have a CEO...

-

I, G(Zombie) — LessWrong

Published on March 30, 2025 1:24 AM GMT
There is no such thing as philosophy-free science; there...

-

Exercising Rationality — LessWrong

Published on March 29, 2025 7:08 PM GMT
Or: Why thinking about blue tentacle arms is not...

-

Climbing the Hill of Experiments — LessWrong

Published on March 29, 2025 8:37 PM GMT
A better anything can be achieved with simple tests...

-

Does the AI control agenda broadly rely on no FOOM being possible? — LessWrong

Published on March 29, 2025 7:38 PM GMT
For the purposes of FOOM, I'm defining it as...

-

Yeshua's Basilisk — LessWrong

Published on March 29, 2025 6:11 PM GMT
Suppose you’re an AI researcher trying to make AIs...

-

40 - Jason Gross on Compact Proofs and Interpretability — LessWrong

Published on March 28, 2025 6:40 PM GMT
YouTube link. How do we figure out whether interpretability...

-

AI x Bio Workshop — LessWrong

Published on March 28, 2025 5:21 PM GMT
May 9 & 10, Lighthaven, Berkeley. Hosted by The Longevity...

-

How many times faster can the AGI advance the science than humans do? — LessWrong

Published on March 28, 2025 3:16 PM GMT
I hope that the reasoning in my two posts...

-

Gemini 2.5 is the New SoTA — LessWrong

Published on March 28, 2025 2:20 PM GMT
Gemini 2.5 Pro Experimental is America’s next top large...

-

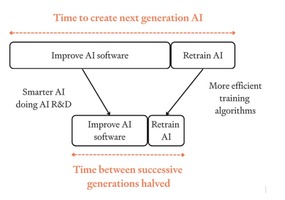

Will the Need to Retrain AI Models from Scratch Block a Software Intelligence Explosion? — LessWrong

Published on March 28, 2025 2:12 PM GMT
Tl;dr: no. This is a rough research note – we’re...

-

How We Might All Die in A Year — LessWrong

Published on March 28, 2025 1:22 PM GMT
Ilya thought back again to when he’d overheard that...

-

The vision of Bill Thurston — LessWrong

Published on March 28, 2025 11:45 AM GMT
PDF version. berkeleygenomics.org. X.com. Bluesky. William Thurston was a...

-

What Uniparental Disomy Tells Us About Improper Imprinting in Humans — LessWrong

Published on March 28, 2025 11:24 AM GMT
TLDR: We do not yet understand all the genetic imprints...

-

Explaining British Naval Dominance During the Age of Sail — LessWrong

Published on March 28, 2025 5:47 AM GMT
The other day I discussed how high monitoring costs...

-

Will the AGIs be able to run the civilisation? — LessWrong

Published on March 28, 2025 4:50 AM GMT
Even an AGI "aligned" to a purpose which doesn't...