~www_lesswrong_com | Bookmarks (706)

-

Non-Consensual Consent: The Performance of Choice in a Coercive World — LessWrong

Published on March 20, 2025 5:12 PM GMT | Crossposted from Substack. Consider the following scenario: You're sitting in...

-

Everything's An Emergency — LessWrong

Published on March 20, 2025 5:12 PM GMT | Crosspost of an article on my totes amazing blog...

-

Why am I getting downvoted on Lesswrong? — LessWrong

Published on March 19, 2025 6:32 PM GMT | I was first introduced to Lesswrong about 6 months...

-

How Do We Govern AI Well? — LessWrong

Published on March 19, 2025 5:08 PM GMT | This is the first essay in my series How...

-

Forecasting AI Futures Resource Hub — LessWrong

Published on March 19, 2025 5:26 PM GMT | Like a collector, I gather resources and information. Storing...

-

TBC episode w Dave Kasten from Control AI on AI Policy — LessWrong

Published on March 19, 2025 5:09 PM GMT | In our latest episode Dave Kasten joins us to...

-

Prioritizing threats for AI control — LessWrong

Published on March 19, 2025 5:09 PM GMT | We often talk about ensuring control, which in the...

-

METR: Measuring AI Ability to Complete Long Tasks — LessWrong

Published on March 19, 2025 4:00 PM GMT | Summary: We propose measuring AI performance in terms of...

-

The principle of genomic liberty — LessWrong

Published on March 19, 2025 2:27 PM GMT | PDF version. berkeleygenomics.org. Twitter thread. (Bluesky copy.) Summary: The...

-

Going Nova — LessWrong

Published on March 19, 2025 1:30 PM GMT | There is an attractor state where LLMs exhibit the...

-

Equations Mean Things — LessWrong

Published on March 19, 2025 8:16 AM GMT | I asserted that this forum could do with more...

-

Elite Coordination via the Consensus of Power — LessWrong

Published on March 19, 2025 6:56 AM GMT | This post is about how implicit coordination between powerful...

-

Things Look Bleak for White-Collar Jobs Due to AI Acceleration — LessWrong

Published on March 17, 2025 5:03 PM GMT | Reason this post exists: I’m a layman when it...

-

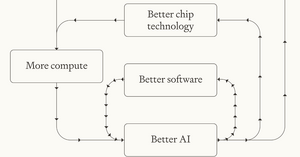

Three Types of Intelligence Explosion — LessWrong

Published on March 17, 2025 2:47 PM GMT | Abstract: Once AI systems can design and build even more...

-

An Advent of Thought — LessWrong

Published on March 17, 2025 2:21 PM GMT | I was intending to write and post one...

-

Interested in working from a new Boston AI Safety Hub? — LessWrong

Published on March 17, 2025 1:42 PM GMT | TL;DR: Submit your expression of interest for working at a...

-

Other Civilizations Would Recover 84+% of Our Cosmic Resources - A Challenge to Extinction Risk Prioritization — LessWrong

Published on March 17, 2025 1:12 PM GMT | Crossposted on the EA Forum. We introduce a first evaluation...

-

Monthly Roundup #28: March 2025 — LessWrong

Published on March 17, 2025 12:50 PM GMT | I plan to continue to leave the Trump administration...

-

Are corporations superintelligent? — LessWrong

Published on March 17, 2025 10:36 AM GMT | This is an article in the featured articles series...

-

The Case for AI Optimism — LessWrong

Published on March 17, 2025 1:29 AM GMT | This is a very comprehensive article sharing what could...

-

Notable utility-monster-like LLM failure modes on Biologically and Economically aligned AI safety benchmarks for LLMs with simplified observation format — LessWrong

Published on March 16, 2025 11:23 PM GMT | By Roland Pihlakas, Sruthi Kuriakose, Shruti Datta Gupta. Summary and...

-

Read More News — LessWrong

Published on March 16, 2025 9:31 PM GMT | TLDR: Instead of scrutinizing minor errors, ask what process...

-

The Fork in the Road — LessWrong

Published on March 15, 2025 5:36 PM GMT | tl;dr: We will soon be forced to make a...

-

Any-Benefit Mindset and Any-Reason Reasoning — LessWrong

Published on March 15, 2025 5:10 PM GMT | One concept from Cal Newport's Deep Work that has stuck...