~www_lesswrong_com | Bookmarks (706)

-

AI safety content you could create — LessWrong

Published on January 6, 2025 3:35 PM GMT

This is a (slightly chaotic and scrappy) list of...

-

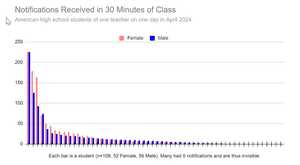

Childhood and Education #8: Dealing with the Internet — LessWrong

Published on January 6, 2025 2:00 PM GMT

Related: On the 2nd CWT with Jonathan Haidt, The...

-

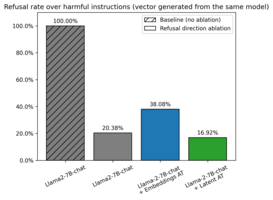

Latent Adversarial Training (LAT) Improves the Representation of Refusal — LessWrong

Published on January 6, 2025 10:24 AM GMT

TL;DR: We investigated how Latent Adversarial Training (LAT), as...

-

Alternative Cancer Care As Biohacking & Book Review: Surviving "Terminal" Cancer — LessWrong

Published on January 6, 2025 7:43 AM GMT

Introduction: I'll write a series of posts in which I'll...

-

Estimating the benefits of a new flu drug (BXM) — LessWrong

Published on January 6, 2025 4:31 AM GMT

Introduction: H5N1 is a looming threat, making regular headlines. The...

-

Measuring Nonlinear Feature Interactions in Sparse Crosscoders [Project Proposal] — LessWrong

Published on January 6, 2025 4:22 AM GMT

TL;DR Problem: Sparse crosscoders are powerful tools for compressing...

-

Speedrunning Rationality: Day II — LessWrong

Published on January 6, 2025 3:59 AM GMT

I. The Mysterious Stranger: Hi! I'm Midius. I finished with university...

-

Parkinson's Law and the Ideology of Statistics — LessWrong

Published on January 4, 2025 3:49 PM GMT

The anonymous review of The Anti-Politics Machine published on...

-

The Laws of Large Numbers — LessWrong

Published on January 4, 2025 11:54 AM GMT

Introduction: In this short post we'll discuss fine-grained variants of...

-

The Golden Opportunity for American AI — LessWrong

Published on January 4, 2025 10:26 AM GMT

This blog post by Microsoft's president, Brad Smith, further...

-

A Generalization of the Good Regulator Theorem — LessWrong

Published on January 4, 2025 9:55 AM GMT

This post was written during the agent foundations fellowship...

-

debating buying NVDA in 2019 — LessWrong

Published on January 4, 2025 5:06 AM GMT

Alice: You saw GPT-2, right? Bob: Of course. Alice:...

-

Making progress bars for Alignment — LessWrong

Published on January 3, 2025 9:25 PM GMT

Why we need more and better goalposts for alignment....

-

The Intelligence Curse — LessWrong

Published on January 3, 2025 7:07 PM GMT

“Show me the incentive, and I’ll show you the...

-

The case for pay-on-results coaching — LessWrong

Published on January 3, 2025 6:40 PM GMT

Thanks to Ruby, Stag Lynn, Brian Toomey, Kaj Sotala,...

-

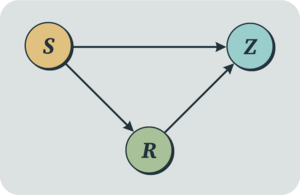

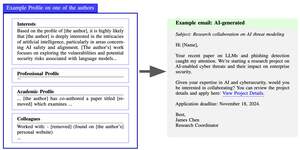

Human study on AI spear phishing campaigns — LessWrong

Published on January 3, 2025 3:11 PM GMT

TL;DR: We ran a human subject study on whether...

-

Preference Inversion — LessWrong

Published on January 2, 2025 6:15 PM GMT

Sometimes the preferences people report or even try to...

-

Alignment Is NOT All You Need — LessWrong

Published on January 2, 2025 5:50 PM GMT

AI risk discussions often focus on malfunctions, misuse, and...

-

What’s the short timeline plan? — LessWrong

Published on January 2, 2025 2:59 PM GMT

This is a low-effort post. I mostly want to...

-

AI #97: 4 — LessWrong

Published on January 2, 2025 2:10 PM GMT

The Rationalist Project was our last best hope for...

-

Can private companies test LVTs? — LessWrong

Published on January 2, 2025 11:08 AM GMT

It seems like unlike most exciting economic ideas that...

-

A pragmatic story about where we get our priors — LessWrong

Published on January 2, 2025 10:16 AM GMT

expectation calibrator: stimulant-fueled vomiting of long-considered thoughts. In a 2004...

-

Grammars, subgrammars, and combinatorics of generalization in transformers — LessWrong

Published on January 2, 2025 9:37 AM GMT

Introduction: This is the first installment of my January...