~www_lesswrong_com | Bookmarks (706)

-

2025 Alignment Predictions — LessWrong

Published on January 2, 2025 5:37 AM GMT I’m curious how alignment researchers would answer these two...

-

Grading my 2024 AI predictions — LessWrong

Published on January 2, 2025 5:01 AM GMT On Jan 8 2024, I wrote a Google doc...

-

Practicing Bayesian Epistemology with "Two Boys" Probability Puzzles — LessWrong

Published on January 2, 2025 4:42 AM GMT The Puzzles: There's a simple Monty Hall adjacent probability puzzle...

-

DeepSeek v3: The Six Million Dollar Model — LessWrong

Published on December 31, 2024 3:10 PM GMT What should we make of DeepSeek v3? DeepSeek v3...

-

I Recommend More Training Rationales — LessWrong

Published on December 31, 2024 2:06 PM GMT Some time ago I happened to read the concept...

-

The Plan - 2024 Update — LessWrong

Published on December 31, 2024 1:29 PM GMT This post is a follow-up to The Plan - 2023...

-

Zombies among us — LessWrong

Published on December 31, 2024 5:14 AM GMT I met a man in the Florida Keys...

-

Two Weeks Without Sweets — LessWrong

Published on December 31, 2024 3:30 AM GMT I recently tried giving up sweets for two...

-

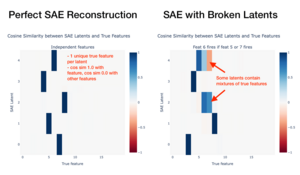

Broken Latents: Studying SAEs and Feature Co-occurrence in Toy Models — LessWrong

Published on December 30, 2024 10:50 PM GMT Thanks to Jean Kaddour, Tomáš Dulka, and Joseph Bloom...

-

Genetically edited mosquitoes haven't scaled yet. Why? — LessWrong

Published on December 30, 2024 9:37 PM GMT A post on difficulty of eliminating malaria using gene...

-

The low Information Density of Eliezer Yudkowsky & LessWrong — LessWrong

Published on December 30, 2024 7:43 PM GMT TLDR: I think Eliezer Yudkowsky & many posts on LessWrong...

-

Linkpost: Look at the Water — LessWrong

Published on December 30, 2024 7:49 PM GMT This is a linkpost for https://jbostock.substack.com/p/prologue-train-crash Epistemic status: fiction, satire...

-

o3, Oh My — LessWrong

Published on December 30, 2024 2:10 PM GMT OpenAI presented o3 on the Friday before Christmas, at...

-

Could my work, "Beyond HaHa" benefit the LessWrong community? — LessWrong

Published on December 29, 2024 4:14 PM GMT I’m considering translating my work into English to share...

-

Book Summary: Zero to One — LessWrong

Published on December 29, 2024 4:13 PM GMT Summary. Zero to One is a collection of notes...

-

Boston Solstice 2024 Retrospective — LessWrong

Published on December 29, 2024 3:40 PM GMT Last night was the ninth Boston Secular Solstice...

-

Some arguments against a land value tax — LessWrong

Published on December 29, 2024 3:17 PM GMT To many people, the land value tax (LVT) has...

-

Predictions of Near-Term Societal Changes Due to Artificial Intelligence — LessWrong

Published on December 29, 2024 2:53 PM GMT The intended audience of this post is not the...

-

Considerations on orca intelligence — LessWrong

Published on December 29, 2024 2:35 PM GMT Follow up to: Could orcas be smarter than humans? (For...

-

The Legacy of Computer Science — LessWrong

Published on December 29, 2024 1:15 PM GMT "Computer Science" is not a science, and its...

-

Shallow review of technical AI safety, 2024 — LessWrong

Published on December 29, 2024 12:01 PM GMT from aisafety.world The following is a list of live agendas in...

-

Dishbrain and implications. — LessWrong

Published on December 29, 2024 10:42 AM GMT I believe that AI research has not given sufficient...

-

Notes on Altruism — LessWrong

Published on December 29, 2024 3:13 AM GMT This post examines the virtue of altruism. I’m less...

-

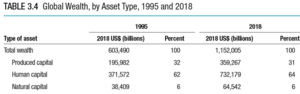

By default, capital will matter more than ever after AGI — LessWrong

Published on December 28, 2024 5:52 PM GMT I've heard many people say something like "money won't...